In the world of big data, moving information between transactional databases and analytical data warehouses is a common but often tricky task. The challenge lies in doing this efficiently, reliably, and without breaking the bank. If you’re looking for a way to sync data from Cloud Spanner to BigQuery without the high costs of real-time streaming, you've come to the right place. We've developed a robust batch synchronization system using a suite of Google Cloud services that ensures no records are missed or duplicated, all while keeping costs low.

This article breaks down our solution, detailing the architecture, the step-by-step process, and the key piece of code that kicks everything off.

The Architecture: A Symphony of Google Cloud Services

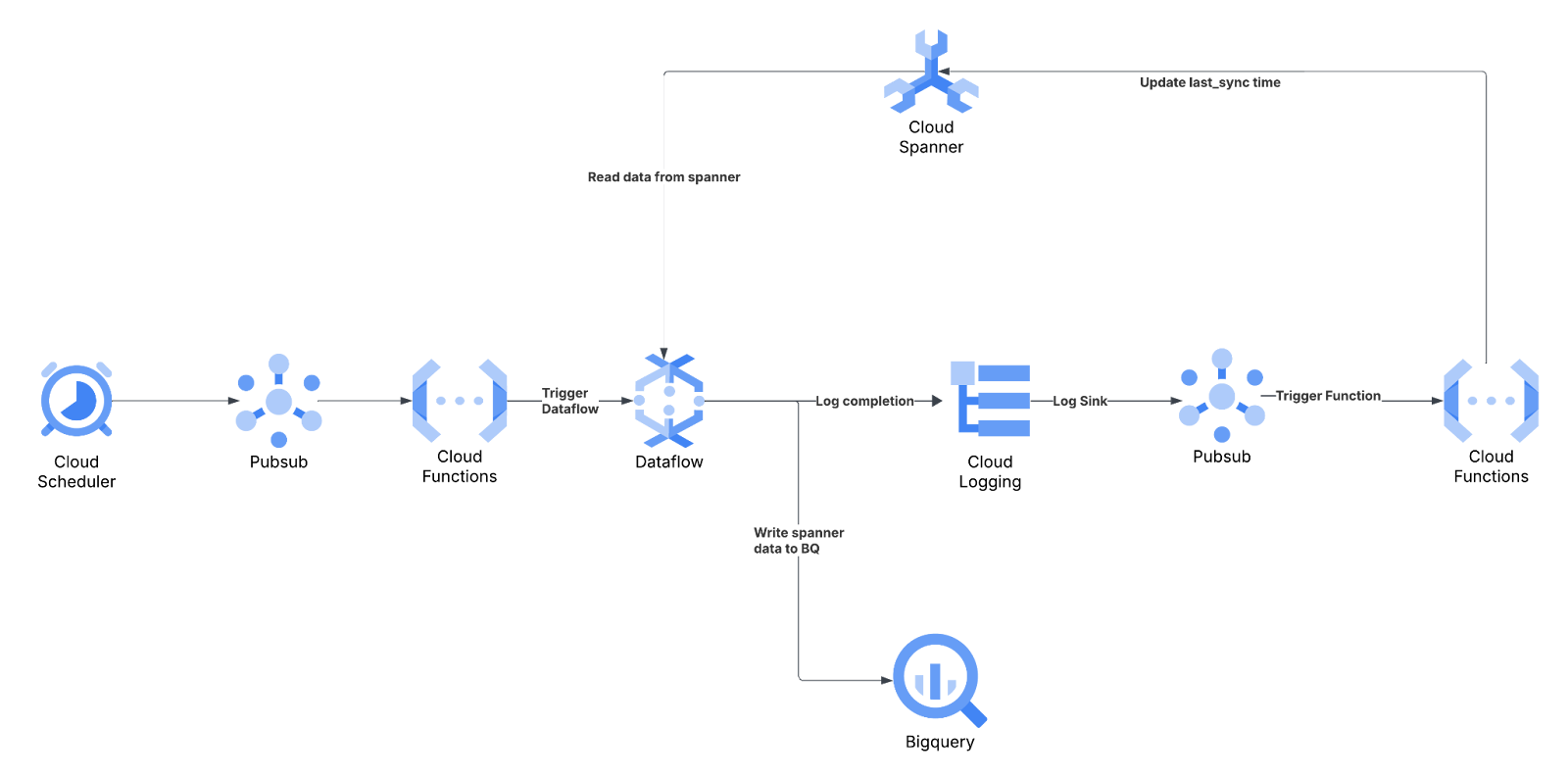

Our system leverages several powerful Google Cloud services working in concert to create a seamless and automated data pipeline. The process begins with a scheduled trigger and gracefully hands off tasks between services to ensure a reliable data transfer.

Here are the key components and their roles:

- Cloud Scheduler: This acts as the pacemaker for our system, publishing a message to a Pub/Sub topic every hour to initiate the sync process.

- Pub/Sub: This messaging service is used at two key points. First, it receives the trigger message from Cloud Scheduler. Second, it's used later to capture job completion notifications from Dataflow.

- Cloud Functions: We use two separate Cloud Functions.

- The "Trigger" Function: This function is subscribed to the initial Pub/Sub topic. When it receives a message from the scheduler, it runs code to fetch metadata and launch the main Dataflow job.

- The "Update" Function: This function listens for the job completion message from the second Pub/Sub topic and updates the

last_syncedtimestamp in Spanner after a successful run.

- Cloud Spanner: This is our source transactional database. It holds the raw data and a crucial

sync_metadatatable that tracks the timestamp of the last successful sync. - Google Cloud Dataflow: This is the workhorse of our operation, running a batch processing job to pull only new records from Spanner using an SQL query. We use Google-provided Flex Templates for easier management.

- BigQuery: This is our destination data warehouse, where the data from Spanner is loaded in batches for large-scale analysis and reporting.

- Cloud Logging: This service captures the status logs from our Dataflow job, which are then routed to Pub/Sub to signal that the job is complete.

How It Works: A Step-by-Step Breakdown

The elegance of this system is in its straightforward, repeatable workflow, which ensures data integrity at every stage.

- Scheduled Trigger: Once every hour, Cloud Scheduler publishes a message to a Pub/Sub topic.

- Job Initiation: A Cloud Function, triggered by the Pub/Sub message, begins the main process. It fetches the

last_syncedtimestamp from Spanner to determine the starting point for the data pull.- Dataflow Execution: The Cloud Function launches a Dataflow job, passing in a custom SQL query:

SELECT * FROM {TableName} WHERE created_at > '{last_synced}'. This ensures only new records are extracted. - Batch Loading: The Dataflow job efficiently loads the new data into BigQuery using batch inserts, a method far more cost-effective than streaming.

- Completion Notification: Once the Dataflow job finishes, Cloud Logging captures this event and a log sink forwards a notification to a second Pub/Sub topic.

- Confirmation and Update: The second Cloud Function, listening to this topic, is triggered. It performs a final, critical check by querying BigQuery for the maximum

created_attimestamp among the newly loaded records (MAX(created_at)). This timestamp is then written back to thesync_metadatatable in Spanner as the newlast_syncedvalue, preventing data loss in the next cycle.

Why This Batch Approach Wins

For many applications, real-time data streaming is overkill. This batch processing system offers several key advantages:

- Cost-Effectiveness: Batch loading into BigQuery is dramatically cheaper than streaming inserts.

- Data Integrity: The

last_syncedandMAX(created_at)logic creates a reliable system that prevents data loss or duplication. - Simplicity and Automation: By using managed services like Dataflow Flex Templates, Cloud Scheduler, and Cloud Functions, the system is easy to maintain and runs automatically.

By orchestrating these powerful Google Cloud tools, we've created a data synchronization pipeline that is not only robust and reliable but also remarkably efficient. It’s a perfect solution for anyone needing to move large datasets from Spanner to BigQuery for analysis without incurring the high cost and complexity of a real-time streaming architecture.

.png)